AI Data Center Networking

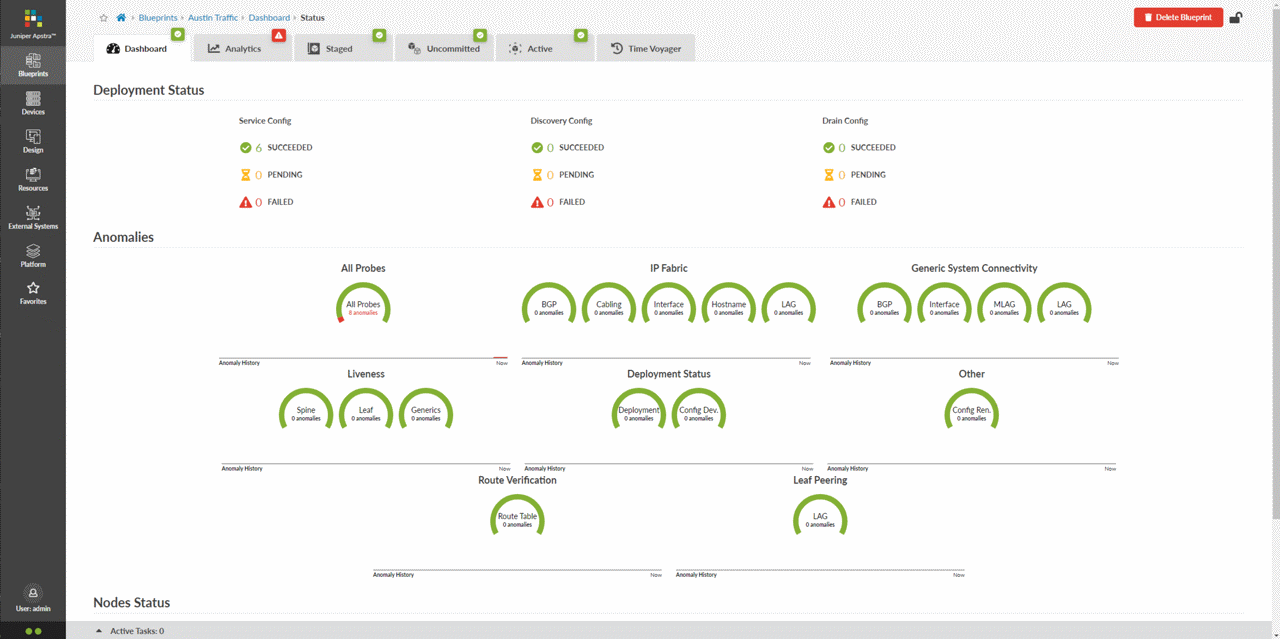

Simple and seamless operator experiences that save time and money

Recent advances in generative artificial intelligence (AI) have captured the imaginations of hundreds of millions of people around the world and catapulted AI and machine learning (ML) into the corporate spotlight. Data centers are the engines behind AI, and data center networks play a critical role in interconnecting and maximizing the utilization of costly GPU servers.

AI training, whose performance is measured by job completion time (JCT), is a massively parallel processing problem. A fast and reliable network fabric is needed to get the most out of your expensive GPUs. The right network is key to optimizing ROI, and the formula is simple: design the right network and save big on AI applications.
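
To see why the fabric matters for JCT, consider synchronous data-parallel training. Every iteration ends with a collective exchange among all GPUs, so a step cannot finish until the slowest exchange does. The sketch below is a deliberately simplified model under stated assumptions: compute and communication do not overlap, and the step count and millisecond figures are hypothetical. The function names are illustrative only. It shows how a single congested path stretches JCT for the whole job.

```python
# Simplified model (assumption): each synchronous training step takes the
# compute time plus the time of the SLOWEST worker's network exchange,
# because every GPU must wait for the collective to finish.


def step_time_ms(compute_ms: float, comm_ms_per_worker: list[float]) -> float:
    """Duration of one synchronous step, gated by the slowest exchange."""
    return compute_ms + max(comm_ms_per_worker)


def job_completion_time_s(steps: int, compute_ms: float,
                          comm_ms_per_worker: list[float]) -> float:
    """Job completion time (JCT) in seconds for a fixed number of steps."""
    return steps * step_time_ms(compute_ms, comm_ms_per_worker) / 1000.0


def gpu_utilization(compute_ms: float, comm_ms_per_worker: list[float]) -> float:
    """Fraction of each step the GPUs spend computing rather than waiting."""
    return compute_ms / step_time_ms(compute_ms, comm_ms_per_worker)


if __name__ == "__main__":
    healthy = [20.0] * 8                 # 8 workers, every exchange takes 20 ms
    one_congested = [20.0] * 7 + [60.0]  # one worker sits behind a congested link

    print(job_completion_time_s(10_000, 100.0, healthy))        # 1200.0
    print(job_completion_time_s(10_000, 100.0, one_congested))  # 1600.0
```

In this toy model, one slow link out of eight raises JCT by a third and drops GPU compute utilization from about 83% to 62.5%, even though the other seven paths are healthy.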

How Juniper can help

Juniper’s AI Data Center solution is a quick way to deploy high-performing AI training and inference networks that are the most flexible to design and the easiest to manage with limited IT resources. We integrate industry-leading AIOps and world-class networking technologies to help customers build high-capacity, easy-to-operate network fabrics that deliver the fastest JCTs while maximizing GPU utilization.

Simplified operations for up to 90% lower networking-related OPEX

Our operations-first approach saves time and money without vendor lock-in. Juniper Apstra's unique intent-based automation shields operators from network complexity and accelerates deployment. New AIOps capabilities in the data center with Marvis, our virtual network assistant (VNA), further enhance operator and end-user experiences, enabling customers to see and fix problems proactively and quickly. The result is up to 85% faster deployments when using Juniper for AI data center networking.

100% interoperable with all leading GPUs, fabrics, and switches

Proprietary solutions that lock in enterprises can stifle AI innovation. Juniper’s solution assures the fastest innovation, maximizes design flexibility, and prevents vendor lock-in for backend, frontend, and storage AI networks. Our open, AI-optimized Ethernet solution ensures feature velocity and cost savings, while Apstra is the only solution for data center operations and assurance across multivendor networks. With Juniper, you have the freedom to choose any GPU, fabric, and switch to best meet your individual data center networking needs.

Turnkey solutions result in up to 10X better reliability

Juniper delivers turnkey solutions for deploying high-performing AI data centers with flexibility and ease, from switching and routing to operations and security. Juniper Validated Designs (JVDs) simplify deployment and troubleshooting so you can build the next great AI model with confidence and speed. Silicon diversity in our products drives scale, performance, and customer flexibility, while integrated security protects AI workloads and infrastructure from cyberattacks.

Explore Juniper’s AI Data Center

Discover end-to-end secure solutions that enable you to build high-performing AI data centers with flexibility and ease. Watch the explainer video to learn how Juniper’s open, AI-optimized Ethernet solution ensures feature velocity and cost savings.

Related Solutions

Data Center Networks

Simplify operations and assure reliability with the modern, automated data center. Juniper helps you automate and continuously validate the entire network lifecycle to ease design, deployment, and operations.

Data Center Interconnect

Juniper’s DCI solutions enable seamless interconnectivity that breaks through traditional scalability limitations, vendor lock-in, and interoperability challenges.

Converged Optical Routing Architecture (CORA)

CORA is an extensible, sustainable, automated solution for IP-optical convergence. It delivers the essential building blocks operators need to deploy transformative IP-over-DWDM strategies for 400G networking and beyond in metro, edge, and core networks.

IP Storage Networking

Simplify your data storage and boost data center performance with all-IP storage networks. Use the latest technologies, such as NVMe/RoCEv2 with 100G/400G switching or NVMe/TCP, to build high-performance storage or converge your storage and data into a single network.

CUSTOMER SUCCESS

SambaNova makes high-performance, compute-bound machine learning easy and scalable

AI promises to transform healthcare, financial services, manufacturing, retail, and other industries, but many organizations seeking to improve the speed and effectiveness of human efforts have yet to reach the full potential of AI.

To overcome the complexity of building compute-bound machine learning (ML) systems, SambaNova engineered DataScale. Designed using SambaNova Systems’ Reconfigurable Dataflow Architecture (RDA) and built using open standards and user interfaces, DataScale is an integrated software and hardware systems platform optimized from algorithms to silicon. Juniper switching moves massive volumes of data for SambaNova’s DataScale systems and services.

AI Data Center Networking FAQs

What types of businesses are prioritizing the deployment of AI/ML solutions in their data centers today?

AI demand is driving hyperscalers, cloud providers, enterprises, governments, and educational institutions to incorporate AI into their business systems to automate operations, generate content and communications, and improve customer service.

What is the difference between the training and inference stages of AI?

AI models are built using carefully crafted data sets during the training stage. Training is distributed across a cluster of tens, hundreds, or even thousands of GPUs, all connected across a network and constantly exchanging data with one another. After training, the model is essentially complete. During the inference stage, users interact with the model, which can recognize images or generate pictures and text to answer user questions. Training is typically an offline operation, whereas inference is generally online.
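
The "constant exchange" during training is typically an all-reduce collective that sums gradients across GPUs. The sketch below simulates ring all-reduce, one widely used all-reduce algorithm, with plain Python lists. It is an illustration, not how any particular library implements it, and the function names are hypothetical. Assumptions: sum reduction, each worker's vector has exactly one element per worker (so each element is one chunk), and all transfers within a step happen simultaneously. It also counts the chunks each worker sends, matching the well-known result that ring all-reduce moves about 2(N-1)/N times the data size per GPU.

```python
def ring_allreduce(vectors: list[list[int]]) -> tuple[list[list[int]], int]:
    """Sum-reduce one vector per worker using the ring algorithm.

    Assumes len(vectors[r]) == number of workers, so element c is chunk c.
    Returns every worker's final buffer and the chunks each worker sent.
    """
    n = len(vectors)
    if any(len(v) != n for v in vectors):
        raise ValueError("each vector needs exactly one chunk per worker")
    buf = [list(v) for v in vectors]
    chunks_sent_per_worker = 0

    # Phase 1, reduce-scatter: in step s, worker r passes chunk (r - s) to its
    # right-hand neighbor, which adds it to its own copy. Afterwards worker r
    # holds the fully summed chunk (r + 1) % n.
    for step in range(n - 1):
        msgs = [(r, (r - step) % n) for r in range(n)]
        values = [buf[r][c] for r, c in msgs]  # snapshot: sends are simultaneous
        for (r, c), value in zip(msgs, values):
            buf[(r + 1) % n][c] += value
        chunks_sent_per_worker += 1

    # Phase 2, all-gather: each completed chunk is forwarded around the ring,
    # overwriting stale partial sums, until every worker has every chunk.
    for step in range(n - 1):
        msgs = [(r, (r + 1 - step) % n) for r in range(n)]
        values = [buf[r][c] for r, c in msgs]
        for (r, c), value in zip(msgs, values):
            buf[(r + 1) % n][c] = value
        chunks_sent_per_worker += 1

    return buf, chunks_sent_per_worker


def ring_bytes_per_gpu(n_gpus: int, size_bytes: int) -> float:
    """Approximate bytes each GPU sends in one ring all-reduce: 2(N-1)/N * S."""
    return 2 * (n_gpus - 1) * size_bytes / n_gpus


if __name__ == "__main__":
    grads = [[1, 2, 3, 4], [10, 20, 30, 40],
             [100, 200, 300, 400], [1000, 2000, 3000, 4000]]
    result, sent = ring_allreduce(grads)
    print(result[0])  # [1111, 2222, 3333, 4444]
    print(sent)       # 6, i.e. 2(N-1) chunks for N = 4
    print(ring_bytes_per_gpu(8, 1_000_000_000))  # 1750000000.0
```

Because every GPU sends nearly twice the gradient size on every step, training traffic scales with model size rather than shrinking as the cluster grows, which is why the per-link bandwidth of the fabric matters.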

What are the components of an AI data center network infrastructure solution, and how does Juniper enable them?

Massive AI data sets are creating the need for greater compute power, faster storage, and high-capacity, low-latency networking. Juniper helps meet these requirements in the following ways:

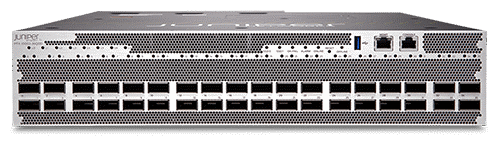

- Compute: AI/ML compute clusters place heavy requirements on the inter-node network. Lowering job completion time (JCT) is essential, and the network plays a key part in the efficient operation of the cluster. Juniper offers a range of high-performance, non-blocking switches with deep buffer capability and congestion management that, when architected optimally, eliminate any network bottleneck.

- Storage: In AI/ML clusters and high-performance computing, rarely can an entire data set or model be stored on the compute nodes, so a high-performance storage network is required. Juniper QFX Series Switches can be used for IP storage connectivity; they offer full support for Remote Direct Memory Access (RDMA) networking, including Non-Volatile Memory Express/RDMA over Converged Ethernet (NVMe/RoCE) and Network File System (NFS)/RDMA.

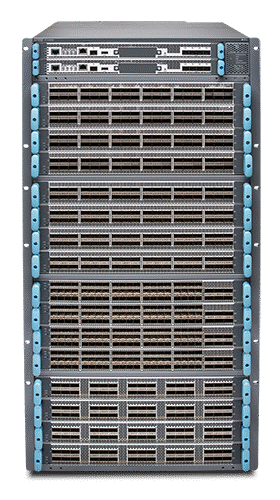

- Network: AI training models involve large, intense computations distributed over hundreds or thousands of CPUs, GPUs, and TPUs. These computations demand high-capacity, horizontally scalable, and error-free networks. Juniper QFX Series Switches and PTX Series Routers support these large computations within and across data centers with industry-leading switching and routing throughput and data center interconnect (DCI) capabilities.
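
"Non-blocking" is usually expressed as a 1:1 oversubscription ratio: a leaf's uplink capacity toward the spines is at least its downlink capacity toward servers. The sketch below shows that arithmetic plus the textbook maximum size of a non-blocking two-tier leaf-spine (folded Clos) fabric built from identical switches. It is generic network arithmetic, not Juniper sizing guidance; the function names, port counts, and speeds are hypothetical, and it assumes exactly one link between each leaf and each spine.

```python
def oversubscription_ratio(down_ports: int, down_gbps: float,
                           up_ports: int, up_gbps: float) -> float:
    """Downlink capacity divided by uplink capacity for one leaf switch.

    A ratio of 1.0 (or lower) means the leaf is non-blocking toward the spine.
    """
    return (down_ports * down_gbps) / (up_ports * up_gbps)


def max_endpoints_two_tier(radix: int) -> int:
    """Endpoints in a non-blocking two-tier leaf-spine of identical switches.

    Each leaf uses half its ports for endpoints and half for spine uplinks
    (one link per spine), so there are radix/2 spines. Each spine port reaches
    a different leaf, allowing up to `radix` leaves: radix * radix/2 endpoints.
    """
    if radix % 2:
        raise ValueError("radix must be even to split ports evenly")
    return radix * (radix // 2)


if __name__ == "__main__":
    print(oversubscription_ratio(32, 400, 32, 400))  # 1.0 -> non-blocking
    print(oversubscription_ratio(48, 100, 8, 400))   # 1.5 -> 1.5:1 oversubscribed
    print(max_endpoints_two_tier(64))                # 2048
```

Beyond the two-tier limit, fabrics typically add another switching tier while keeping each tier non-blocking.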

How does the Juniper AI Data Center solution simplify operations in the data center?

Apstra is Juniper’s leading platform for data center automation and assurance. It automates the entire network lifecycle, from design through everyday operations, across multivendor data centers, with continuous validation, powerful analytics, and root-cause identification to assure reliability. With Marvis VNA for the data center, this information is brought from Apstra into the Juniper Mist cloud and presented in a common VNA dashboard for end-to-end insight. Marvis VNA for the data center also provides a robust conversational interface (using GenAI) that dramatically simplifies knowledge base queries.

How does the Juniper AI Data Center Networking solution address congestion management, load balancing, and latency requirements for maximizing AI performance?

Juniper’s high-performance, non-blocking data center switches provide deep buffering and congestion management to eliminate network bottlenecks. To balance traffic loads, we support dynamic load balancing and adaptive routing. For congestion management, Juniper fully supports Data Center Quantized Congestion Notification (DCQCN), Priority Flow Control (PFC), and Explicit Congestion Notification (ECN). Finally, to reduce latency, Juniper uses best-of-breed merchant silicon and custom ASIC architectures that maximize buffers where needed, along with virtual output queuing (VOQ) and cell-based fabrics within our spine architectures.
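
DCQCN ties ECN and rate control together: congested switches mark packets with ECN, the receiving NIC returns congestion notification packets (CNPs), and the sending NIC cuts and then gradually restores its rate, while PFC remains a backstop against packet loss. The sketch below is a simplified model of the switch marking and sender rate-control rules as published in the original DCQCN research, not Juniper's or any NIC vendor's implementation. The class and function names are hypothetical, and the thresholds, gain `g`, additive-increase step, recovery count, and starting `alpha` of 1.0 are illustrative assumptions. PFC, CNP timing, and the hyper-increase stage are omitted.

```python
def ecn_mark_probability(queue_kb: float, k_min_kb: float,
                         k_max_kb: float, p_max: float) -> float:
    """RED-style ECN marking at a congested switch queue (congestion point)."""
    if queue_kb <= k_min_kb:
        return 0.0
    if queue_kb >= k_max_kb:
        return 1.0
    # Linear ramp from 0 at k_min to p_max at k_max.
    return p_max * (queue_kb - k_min_kb) / (k_max_kb - k_min_kb)


class DcqcnSender:
    """Simplified DCQCN reaction point: the sending NIC's rate controller."""

    def __init__(self, line_rate_gbps: float, g: float = 1 / 256,
                 rate_ai_gbps: float = 5.0, fast_recovery_steps: int = 5) -> None:
        self.line_rate = line_rate_gbps
        self.g = g                        # gain for the congestion estimate alpha
        self.rate_ai = rate_ai_gbps       # additive-increase step (assumed value)
        self.fast_recovery_steps = fast_recovery_steps
        self.rc = line_rate_gbps          # current sending rate
        self.rt = line_rate_gbps          # target rate to recover toward
        self.alpha = 1.0                  # congestion estimate (assumed start)
        self.increase_events = 0

    def on_cnp(self) -> None:
        """A CNP arrived: remember the old rate, then cut the current rate."""
        self.rt = self.rc
        self.rc *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g
        self.increase_events = 0

    def on_alpha_timer(self) -> None:
        """No CNP during the last alpha period: decay the congestion estimate."""
        self.alpha *= 1 - self.g

    def on_increase_timer(self) -> None:
        """Rate-increase event: fast recovery first, then additive increase."""
        if self.increase_events >= self.fast_recovery_steps:
            self.rt = min(self.rt + self.rate_ai, self.line_rate)
        self.rc = (self.rt + self.rc) / 2
        self.increase_events += 1


if __name__ == "__main__":
    print(ecn_mark_probability(250, k_min_kb=20, k_max_kb=200, p_max=0.1))  # 1.0
    nic = DcqcnSender(line_rate_gbps=100.0)
    nic.on_cnp()
    print(nic.rc)  # 50.0 (alpha is 1.0, so the first cut halves the rate)
    nic.on_increase_timer()
    print(nic.rc)  # 75.0 (halfway back toward the 100 Gbps target)
```

The multiplicative cut reacts quickly to queue buildup, while averaging back toward the remembered target rate restores throughput without immediately refilling the congested queue.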

What does Juniper offer for IP storage?

Our portfolio includes open, standards-based switches that provide IP-based storage connectivity using NVMe/RoCE or NFS/RDMA (see earlier FAQ). Our IP Storage Networking solution designs can scale from a small four-node configuration to hundreds or thousands of storage nodes.